When I demoed multi-region Cassandra on GKE on the KubeCon ’21 show floor, I was surprised to see so much interest in the topic. Running a K8ssandra cluster on GKE with a cass-operator installed through Helm is impressive in itself: it enables Day 2 operations such as backup/restore and repair. In conjunction with the self-healing properties of Kubernetes, it significantly helps in productionizing a database on Kubernetes.

However, the multi-cluster/multi-region approach seemed more appropriate for KubeCon. This article goes into the rationale for introducing an additional K8ssandra-operator that supplements the cass-operator to enable multi-cluster installs and operations. Although I had a working version of the K8ssandra-operator before KubeCon, it was way too early to build a cloud-based demo around it.

As we’re going to see, the most challenging part was setting up the networking. Once that was done, it was a simple matter of injecting the seeds from one Cassandra cluster (also referred to as a data center) into the other. If you’re using the K8ssandra Operator, it handles this automatically across clusters. With the networking set up properly, the masterless Cassandra pods in each K8ssandra cluster communicate with their peers and establish a larger cluster across multiple regions.

For GKE, it’s pretty straightforward to set up the “networking handshake” by explicitly creating a subnet in the second region as part of the same network created for the first data center, as outlined in the blog.

Next was AWS re:Invent. Since AWS re:Invent followed closely on the heels of KubeCon, and since setting up a multi-region cluster on Kubernetes was generating a lot of interest (and the same GKE demonstration would not fly among AWS fans), I turned my attention from GKE to AWS and EKS.

Enter kubefed and specifically EKS Kubefed. A quick overview and distinction between the projects follows in the next section.

EKS Kubefed

Kubernetes federation (kubefed) is a generic approach to multi-cluster Kubernetes: it aims to automatically detect failures, self-heal, and so on across multiple clusters.

EKS Kubefed, which is based on kubefed, is intended specifically for EKS clusters and deals with AWS networking in particular. The project is maintained by AWS Labs and is intended for apps that need HA, resiliency, and automatic scaling. It’s an opinionated implementation, but it fit my purpose of creating a repeatable multi-region Cassandra demo on EKS.

It’s worth mentioning that both projects are in their infancy and probably not ready for production use (yet).

Ideally, I would have liked a generic solution for multiple clouds. But given that I am not a networking expert and that AWS networking is significantly different from, and harder than, GCP networking, I resorted to the next best alternative: building something repeatable. This solution, preferably script-based (with minimal customization), would set up the essential networking ingredients, including:

- VPCs

- VPC peering

- Routing and security groups for communication between the VPCs

Optionally, if the Kubernetes clusters could be installed in the respective VPCs with the networking already taken care of, it would help me set up and tear down the infrastructure on demand, since I anticipated having to do this a few times before I got it right.

EKS Kubefed does pretty much all of this. As explained in the EKS Kubefed docs, it does the following.

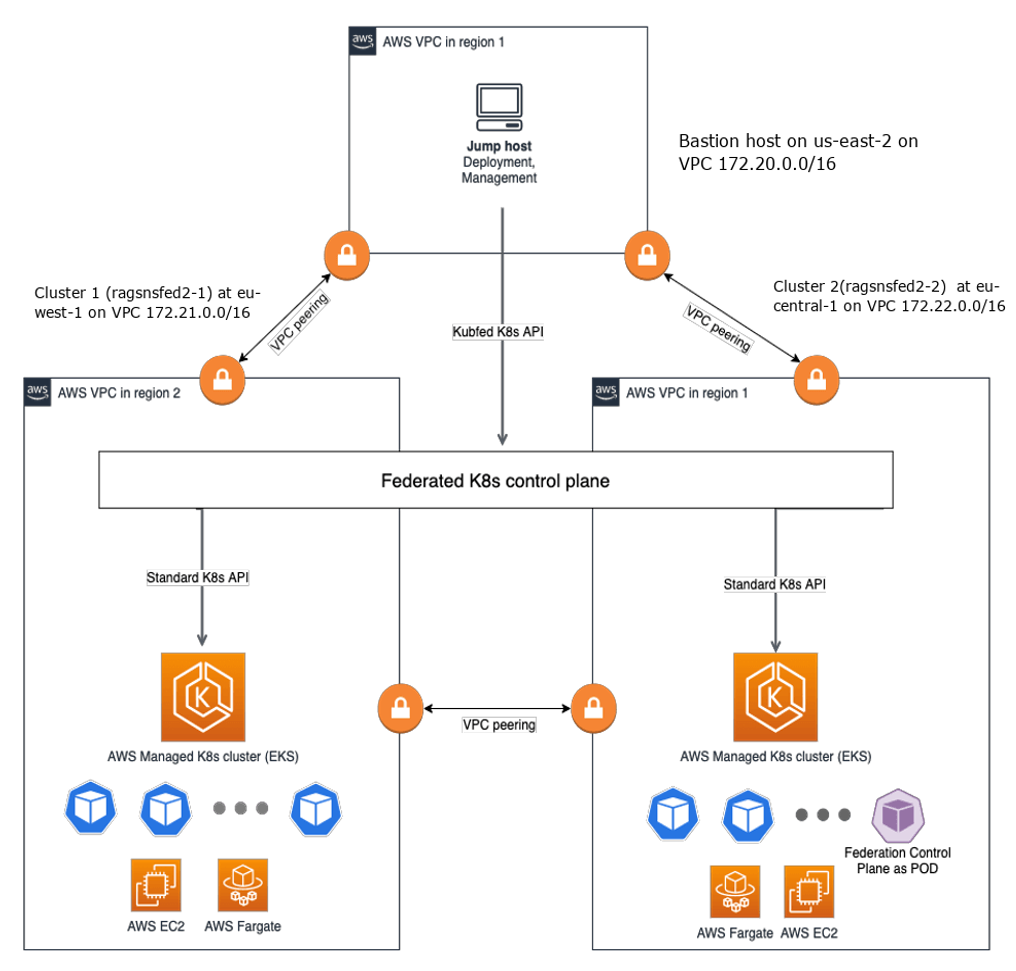

As a first step, the solution template deploys a jump/bastion host in a new VPC and provisions all of the necessary resources, including:

- VPC

- Public and private subnets

- NAT Gateway

- Internet Gateway

- EC2 for jump host

Next, via the jump host, it executes the deployment script and deploys the following items:

- Two VPCs for EKS clusters in selected regions.

- Two AWS EKS clusters in different regions, each in its own VPC without distance limitation.

- VPC peering between the three VPCs for secure communication between the jump host, the Federation control plane, and all federated Amazon EKS clusters.

- A Federation control plane based on KubeFed, which runs as a regular pod in one of the Amazon EKS clusters and acts as a proxy between the Kubernetes administrator and all deployed Amazon EKS clusters.

I did some minor customization as you’ll see next. Most of this customization is captured in the forked repository I created for this demo.

Technical details

Installing EKS Kubefed

I customized the size of the respective clusters (up from two nodes to three) but left the regions, VPCs, and IP CIDR ranges intact. My goal was to be able to peer the two Cassandra data centers, as you will see below.

As a result of installing EKS Kubefed, I had the jump/bastion host in us-east-2 and the two three-node clusters in eu-west-1 and eu-central-1. Figure 1 shows the architecture diagram, on which I superimposed the instance specifics for a better understanding of the install.

Below, I have provided a walkthrough of the clusters from a Kubernetes/K8ssandra perspective.

[root@ip-172-20-3-37 ~]# kubectl config get-contexts

CURRENT NAME CLUSTER AUTHINFO NAMESPACE

* ragsnsfed2-1 ragsnsfed2-1 ragsnsfed2-1

ragsnsfed2-2 ragsnsfed2-2 ragsnsfed2-2
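Since the walkthrough that follows runs the same commands against both clusters, it can help to target each context explicitly with kubectl's `--context` flag instead of switching the current context. The following is a minimal Python sketch of that idea; the helper name `kubectl_argv` is hypothetical, and the commands are only printed here, not executed:

```python
import shlex

# Context names taken from the `kubectl config get-contexts` output above.
CONTEXTS = ["ragsnsfed2-1", "ragsnsfed2-2"]

def kubectl_argv(context, *args):
    """Build a kubectl command line that targets one context explicitly."""
    return ["kubectl", "--context", context, *args]

if __name__ == "__main__":
    for ctx in CONTEXTS:
        # Printed rather than executed; pass the list to subprocess.run
        # on a host where kubectl and the kubeconfig are available.
        print(shlex.join(kubectl_argv(ctx, "get", "nodes", "-o", "wide")))
```

Building the argument list separately keeps the sketch testable without a cluster and avoids accidentally running a command against whichever context happens to be current.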

Cluster 1 (ragsnsfed2-1) was created in eu-west-1, and its nodes and pods were assigned addresses from the VPC CIDR 172.21.0.0/16, as shown below.

[root@ip-172-20-3-37 ~]# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

ip-172-21-14-112.eu-west-1.compute.internal Ready <none> 50d v1.20.11-eks-f17b81 172.21.14.112 54.74.97.131 Amazon Linux 2 5.4.156-83.273.amzn2.x86_64 docker://20.10.7

ip-172-21-47-214.eu-west-1.compute.internal Ready <none> 50d v1.20.11-eks-f17b81 172.21.47.214 63.35.220.26 Amazon Linux 2 5.4.156-83.273.amzn2.x86_64 docker://20.10.7

ip-172-21-85-211.eu-west-1.compute.internal Ready <none> 50d v1.20.11-eks-f17b81 172.21.85.211 34.245.154.168 Amazon Linux 2 5.4.156-83.273.amzn2.x86_64 docker://20.10.7

[root@ip-172-20-3-37 ~]# kubectl describe nodes | grep -i topology

topology.kubernetes.io/region=eu-west-1

topology.kubernetes.io/zone=eu-west-1a

topology.kubernetes.io/region=eu-west-1

topology.kubernetes.io/zone=eu-west-1b

topology.kubernetes.io/region=eu-west-1

topology.kubernetes.io/zone=eu-west-1c

The following output shows that the pods were assigned addresses from the VPC CIDR 172.21.0.0/16 in eu-west-1. They include the cass-operator, Grafana, Prometheus, Reaper, and the Cassandra pods themselves (referred to as data centers).

[root@ip-172-20-3-37 ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

k8ssandra-cass-operator-8675f58b89-hm7cr 1/1 Running 0 60d 172.21.35.157 ip-172-21-47-214.eu-west-1.compute.internal <none> <none>

k8ssandra-crd-upgrader-job-k8ssandra-cqsfd 0/1 Completed 0 61d 172.21.37.143 ip-172-21-47-214.eu-west-1.compute.internal <none> <none>

k8ssandra-grafana-5cd84ccb6c-694mx 2/2 Running 0 60d 172.21.16.35 ip-172-21-14-112.eu-west-1.compute.internal <none> <none>

k8ssandra-kube-prometheus-operator-bf8799b57-bdxrg 1/1 Running 0 60d 172.21.90.33 ip-172-21-85-211.eu-west-1.compute.internal <none> <none>

k8ssandra-reaper-operator-5d9d5d975d-zrb97 1/1 Running 1 60d 172.21.46.28 ip-172-21-47-214.eu-west-1.compute.internal <none> <none>

multi-region-dc1-rack1-sts-0 2/2 Running 0 60d 172.21.21.247 ip-172-21-14-112.eu-west-1.compute.internal <none> <none>

multi-region-dc1-rack2-sts-0 2/2 Running 0 60d 172.21.51.70 ip-172-21-47-214.eu-west-1.compute.internal <none> <none>

multi-region-dc1-rack3-sts-0 2/2 Running 0 60d 172.21.79.159 ip-172-21-85-211.eu-west-1.compute.internal <none> <none>

prometheus-k8ssandra-kube-prometheus-prometheus-0 2/2 Running 0 60d 172.21.69.245 ip-172-21-85-211.eu-west-1.compute.internal <none> <none>

The following output shows the services that were spun up on eu-west-1 by the cass-operator. They include Grafana, Prometheus, Reaper and the DC service and the seeds service, which we used to join this cluster with the other cluster at eu-central-1:

[root@ip-172-20-3-37 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

k8ssandra-grafana ClusterIP 10.100.230.249 <none> 80/TCP 60d

k8ssandra-kube-prometheus-operator ClusterIP 10.100.43.142 <none> 443/TCP 60d

k8ssandra-kube-prometheus-prometheus ClusterIP 10.100.106.70 <none> 9090/TCP 60d

k8ssandra-reaper-reaper-service ClusterIP 10.100.183.186 <none> 8080/TCP 60d

kubernetes ClusterIP 10.100.0.1 <none> 443/TCP 61d

multi-region-dc1-additional-seed-service ClusterIP None <none> <none> 60d

multi-region-dc1-all-pods-service ClusterIP None <none> 9042/TCP,8080/TCP,9103/TCP 60d

multi-region-dc1-service ClusterIP None <none> 9042/TCP,9142/TCP,8080/TCP,9103/TCP,9160/TCP 60d

multi-region-seed-service ClusterIP None <none> <none> 60d

prometheus-operated ClusterIP None <none> 9090/TCP 60d

[root@ip-172-20-3-37 ~]# kubectl get svc -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

k8ssandra-grafana ClusterIP 10.100.230.249 <none> 80/TCP 60d app.kubernetes.io/instance=k8ssandra,app.kubernetes.io/name=grafana

k8ssandra-kube-prometheus-operator ClusterIP 10.100.43.142 <none> 443/TCP 60d app=kube-prometheus-stack-operator,release=k8ssandra

k8ssandra-kube-prometheus-prometheus ClusterIP 10.100.106.70 <none> 9090/TCP 60d app.kubernetes.io/name=prometheus,prometheus=k8ssandra-kube-prometheus-prometheus

k8ssandra-reaper-reaper-service ClusterIP 10.100.183.186 <none> 8080/TCP 60d app.kubernetes.io/managed-by=reaper-operator,reaper.cassandra-reaper.io/reaper=k8ssandra-reaper

kubernetes ClusterIP 10.100.0.1 <none> 443/TCP 61d <none>

multi-region-dc1-additional-seed-service ClusterIP None <none> <none> 60d <none>

multi-region-dc1-all-pods-service ClusterIP None <none> 9042/TCP,8080/TCP,9103/TCP 60d cassandra.datastax.com/cluster=multi-region,cassandra.datastax.com/datacenter=dc1

multi-region-dc1-service ClusterIP None <none> 9042/TCP,9142/TCP,8080/TCP,9103/TCP,9160/TCP 60d cassandra.datastax.com/cluster=multi-region,cassandra.datastax.com/datacenter=dc1

multi-region-seed-service ClusterIP None <none> <none> 60d cassandra.datastax.com/cluster=multi-region,cassandra.datastax.com/seed-node=true

prometheus-operated ClusterIP None <none> 9090/TCP 60d app.kubernetes.io/name=prometheus

Cluster 2 (ragsnsfed2-2) was created in eu-central-1, and its nodes and pods were assigned addresses from the VPC CIDR 172.22.0.0/16, as shown below.

[root@ip-172-20-3-37 ~]# kubectl config use-context ragsnsfed2-2

Switched to context "ragsnsfed2-2".

[root@ip-172-20-3-37 ~]# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

ip-172-22-41-164.eu-central-1.compute.internal Ready <none> 50d v1.20.11-eks-f17b81 172.22.41.164 54.93.229.91 Amazon Linux 2 5.4.156-83.273.amzn2.x86_64 docker://20.10.7

ip-172-22-8-249.eu-central-1.compute.internal Ready <none> 50d v1.20.11-eks-f17b81 172.22.8.249 3.121.162.253 Amazon Linux 2 5.4.156-83.273.amzn2.x86_64 docker://20.10.7

ip-172-22-91-211.eu-central-1.compute.internal Ready <none> 50d v1.20.11-eks-f17b81 172.22.91.211 18.197.146.68 Amazon Linux 2 5.4.156-83.273.amzn2.x86_64 docker://20.10.7

[root@ip-172-20-3-37 ~]# kubectl describe nodes | grep -i topology

topology.kubernetes.io/region=eu-central-1

topology.kubernetes.io/zone=eu-central-1b

topology.kubernetes.io/region=eu-central-1

topology.kubernetes.io/zone=eu-central-1a

topology.kubernetes.io/region=eu-central-1

topology.kubernetes.io/zone=eu-central-1c

Below is output similar to what we saw earlier for the pods and services. The pods were assigned addresses from the VPC CIDR 172.22.0.0/16 in eu-central-1:

[root@ip-172-20-3-37 ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

k8ssandra-cass-operator-8675f58b89-kc542 1/1 Running 3 60d 172.22.66.48 ip-172-22-91-211.eu-central-1.compute.internal <none> <none>

k8ssandra-crd-upgrader-job-k8ssandra-4zq94 0/1 Completed 0 60d 172.22.28.205 ip-172-22-8-249.eu-central-1.compute.internal <none> <none>

k8ssandra-grafana-5cd84ccb6c-dcq5h 2/2 Running 0 60d 172.22.37.244 ip-172-22-41-164.eu-central-1.compute.internal <none> <none>

k8ssandra-kube-prometheus-operator-bf8799b57-z7t2c 1/1 Running 0 60d 172.22.75.254 ip-172-22-91-211.eu-central-1.compute.internal <none> <none>

k8ssandra-reaper-74d796695b-5sprx 1/1 Running 0 60d 172.22.75.234 ip-172-22-91-211.eu-central-1.compute.internal <none> <none>

k8ssandra-reaper-operator-5d9d5d975d-xfcp8 1/1 Running 3 60d 172.22.28.205 ip-172-22-8-249.eu-central-1.compute.internal <none> <none>

multi-region-dc2-rack1-sts-0 2/2 Running 0 60d 172.22.1.122 ip-172-22-8-249.eu-central-1.compute.internal <none> <none>

multi-region-dc2-rack2-sts-0 2/2 Running 0 60d 172.22.34.66 ip-172-22-41-164.eu-central-1.compute.internal <none> <none>

multi-region-dc2-rack3-sts-0 2/2 Running 0 60d 172.22.77.73 ip-172-22-91-211.eu-central-1.compute.internal <none> <none>

prometheus-k8ssandra-kube-prometheus-prometheus-0 2/2 Running 0 60d 172.22.15.157 ip-172-22-8-249.eu-central-1.compute.internal <none> <none>

[root@ip-172-20-3-37 ~]# kubectl get svc -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

k8ssandra-grafana ClusterIP 10.100.176.248 <none> 80/TCP 60d app.kubernetes.io/instance=k8ssandra,app.kubernetes.io/name=grafana

k8ssandra-kube-prometheus-operator ClusterIP 10.100.244.27 <none> 443/TCP 60d app=kube-prometheus-stack-operator,release=k8ssandra

k8ssandra-kube-prometheus-prometheus ClusterIP 10.100.178.211 <none> 9090/TCP 60d app.kubernetes.io/name=prometheus,prometheus=k8ssandra-kube-prometheus-prometheus

k8ssandra-reaper-reaper-service ClusterIP 10.100.117.74 <none> 8080/TCP 60d app.kubernetes.io/managed-by=reaper-operator,reaper.cassandra-reaper.io/reaper=k8ssandra-reaper

kubernetes ClusterIP 10.100.0.1 <none> 443/TCP 61d <none>

multi-region-dc2-additional-seed-service ClusterIP None <none> <none> 60d <none>

multi-region-dc2-all-pods-service ClusterIP None <none> 9042/TCP,8080/TCP,9103/TCP 60d cassandra.datastax.com/cluster=multi-region,cassandra.datastax.com/datacenter=dc2

multi-region-dc2-service ClusterIP None <none> 9042/TCP,9142/TCP,8080/TCP,9103/TCP,9160/TCP 60d cassandra.datastax.com/cluster=multi-region,cassandra.datastax.com/datacenter=dc2

multi-region-seed-service ClusterIP None <none> <none> 60d cassandra.datastax.com/cluster=multi-region,cassandra.datastax.com/seed-node=true

prometheus-operated ClusterIP None <none> 9090/TCP 60d app.kubernetes.io/name=prometheus

As seen above, the jump/bastion host was set up in 172.20.0.0/16 and the clusters in 172.21.0.0/16 and 172.22.0.0/16 respectively, with the VPCs, VPC peering, and routing all enabled.
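VPC peering only works between VPCs whose CIDR blocks do not overlap, which is why the three ranges above are disjoint. As a rough sanity check before deploying a customized layout, a short Python sketch like the one below can flag conflicts. The labels in the dictionary are illustrative and do not come from the EKS Kubefed project:

```python
import ipaddress
from itertools import combinations

# CIDR blocks from the walkthrough above; the labels are illustrative only.
VPC_CIDRS = {
    "jump-host (us-east-2)": "172.20.0.0/16",
    "cluster-1 (eu-west-1)": "172.21.0.0/16",
    "cluster-2 (eu-central-1)": "172.22.0.0/16",
}

def overlapping_pairs(cidrs):
    """Return every pair of labels whose CIDR blocks overlap."""
    networks = {name: ipaddress.ip_network(cidr) for name, cidr in cidrs.items()}
    return [
        (a, b)
        for a, b in combinations(networks, 2)
        if networks[a].overlaps(networks[b])
    ]

if __name__ == "__main__":
    conflicts = overlapping_pairs(VPC_CIDRS)
    print("conflicts:", conflicts or "none")
```

The same `ipaddress` module can also confirm that a given pod IP falls inside the CIDR of the VPC it is expected to live in.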

The nodegroup details for one of the clusters are shown below (they are very similar for the other cluster).

Installing K8ssandra on the first cluster (eu-central-1):

I set up the secret (cassandra-admin-secret) for the username and password on the first cluster as outlined in the blog. Secrets management across multiple clusters is another feature of the K8ssandra-operator.

For the first cluster (in eu-central-1), I used the values below to customize the installation, following the K8ssandra docs for settings such as topologies and storage classes. Notice the topologies: the cluster is distributed across three availability zones, based on how the cluster was created:

cassandra:

  version: "4.0.1"

  auth:

    superuser:

      secret: cassandra-admin-secret

  cassandraLibDirVolume:

    storageClass: local-path

    size: 5Gi

  clusterName: multi-region

  datacenters:

  - name: dc2

    size: 3

    racks:

    - name: rack1

      affinityLabels:

        failure-domain.beta.kubernetes.io/zone: eu-central-1a

    - name: rack2

      affinityLabels:

        failure-domain.beta.kubernetes.io/zone: eu-central-1b

    - name: rack3

      affinityLabels:

        failure-domain.beta.kubernetes.io/zone: eu-central-1c

stargate:

  enabled: false

  replicas: 3

  heapMB: 1024

  cpuReqMillicores: 3000

  cpuLimMillicores: 3000
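The rack layout in these values follows a simple pattern: one rack per availability zone, each pinned to its zone through `affinityLabels`. The following Python sketch generates that part of the structure from a list of zones. The function name `datacenter_values` is hypothetical, and setting `size` to the number of zones (one Cassandra node per rack) is an assumption that happens to match this demo:

```python
import json

def datacenter_values(name, zones,
                      zone_label="failure-domain.beta.kubernetes.io/zone"):
    """Build one `datacenters` entry with a rack pinned to each zone."""
    racks = [
        {"name": f"rack{i}", "affinityLabels": {zone_label: zone}}
        for i, zone in enumerate(zones, start=1)
    ]
    # Assumption: one Cassandra node per rack, so size equals the zone count.
    return {"name": name, "size": len(zones), "racks": racks}

if __name__ == "__main__":
    dc2 = datacenter_values(
        "dc2", ["eu-central-1a", "eu-central-1b", "eu-central-1c"]
    )
    # Printed as JSON for inspection; the keys mirror the values file above.
    print(json.dumps(dc2, indent=2))
```

Generating the entry from the zone list makes it harder to mistype a zone name when the same layout is repeated for a second region.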

I then installed K8ssandra with a helm install using the customized values above and retrieved the IP addresses of the seed pods as shown below:

kubectl get pods -o jsonpath="{.items[*].status.podIP}" --selector cassandra.datastax.com/seed-node=true

Here’s the output, which lists the IP addresses of the seed pods:

172.22.1.122 172.22.34.66 172.22.77.73

I took these IP addresses and plugged them into the YAML file that was used to customize the second cluster as shown in the following section.
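The jsonpath query prints the seed IPs separated by spaces, while the values file expects a YAML list. A minimal Python sketch of that conversion follows; the function name `seeds_to_yaml_line` is hypothetical, and the validation step is only there to catch unexpected kubectl output:

```python
import ipaddress

def seeds_to_yaml_line(jsonpath_output):
    """Turn whitespace-separated pod IPs into an additionalSeeds flow list."""
    seeds = jsonpath_output.split()
    for seed in seeds:
        # Raises ValueError if kubectl returned something that is not an IP.
        ipaddress.ip_address(seed)
    return "additionalSeeds: [ " + ", ".join(seeds) + " ]"

if __name__ == "__main__":
    print(seeds_to_yaml_line("172.22.1.122 172.22.34.66 172.22.77.73"))
```

Keep in mind that pod IPs can change when pods are rescheduled, so the list may need to be regenerated if the seed pods in the first cluster are recreated.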

Installing K8ssandra on the second cluster (eu-west-1):

I set up the secret (cassandra-admin-secret) with the same values for username and password on the second cluster as well, again as outlined in the blog.

The customization YAML file for the second K8ssandra cluster based on the seeds from the first cluster (comma-separated here) is shown below. Notice the topologies and seeds:

cassandra:

  version: "4.0.1"

  auth:

    superuser:

      secret: cassandra-admin-secret

  additionalSeeds: [ 172.22.1.122, 172.22.34.66, 172.22.77.73 ]

  cassandraLibDirVolume:

    storageClass: local-path

    size: 5Gi

  clusterName: multi-region

  datacenters:

  - name: dc1

    size: 3

    racks:

    - name: rack1

      affinityLabels:

        failure-domain.beta.kubernetes.io/zone: eu-west-1a

    - name: rack2

      affinityLabels:

        failure-domain.beta.kubernetes.io/zone: eu-west-1b

    - name: rack3

      affinityLabels:

        failure-domain.beta.kubernetes.io/zone: eu-west-1c

stargate:

  enabled: false

  replicas: 3

  heapMB: 1024

  cpuReqMillicores: 3000

  cpuLimMillicores: 3000

Again, I installed K8ssandra with a helm install using the customized values above. I had to tweak the security groups so that the clusters could communicate on port 9042. Once I did this, I could watch the clusters communicate with each other and form a larger multi-region cluster.
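When security groups are involved, a quick TCP reachability probe from a host or pod in one VPC to the seed IPs in the other can save some guesswork. The sketch below is a generic check written in Python, not part of K8ssandra or EKS Kubefed. Port 9042 is the one mentioned above; port 7000 is Cassandra's default inter-node port and is listed on the assumption that the cluster keeps that default:

```python
import socket

# 9042 is the port named above; 7000 is Cassandra's default inter-node
# (storage) port, assumed here to be unchanged from the default.
PORTS = {"cql": 9042, "internode": 7000}

def can_connect(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False

def probe(hosts, ports=PORTS, timeout=3.0):
    """Map (host, port name) to a reachability flag for every combination."""
    return {
        (host, name): can_connect(host, port, timeout)
        for host in hosts
        for name, port in ports.items()
    }

# Example, run from a pod or node in the other VPC:
#   probe(["172.22.1.122", "172.22.34.66", "172.22.77.73"])
```

A `False` result only says that the TCP handshake failed; it does not distinguish a blocked security group from a missing route or a pod that is not listening.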

Verifying the larger cluster (eu-central-1 and eu-west-1):

I ran the following command to verify that the two clusters (or datacenters) were able to communicate with each other:

[root@ip-172-20-3-37 ~]# kubectl exec -it multi-region-dc1-rack1-sts-0 -c cassandra -- nodetool -u <cassandra-admin> -pw <cassandra-admin-password> status

Datacenter: dc1

===============

Status=Up/Down

|/ State=Normal/Leaving/Joining/Moving

-- Address Load Tokens Owns (effective) Host ID Rack

UN 172.21.21.247 141.61 KiB 16 0.0% b7d43122-7414-47c3-881a-3d0de27eabaa rack1

UN 172.21.51.70 139.86 KiB 16 0.0% a5dc020e-33ab-434d-8fe6-d93da82a7681 rack2

UN 172.21.79.159 140.57 KiB 16 0.0% 63681a4c-6f95-43cd-a53a-fe2c5a2d2555 rack3

Datacenter: dc2

===============

Status=Up/Down

|/ State=Normal/Leaving/Joining/Moving

-- Address Load Tokens Owns (effective) Host ID Rack

UN 172.22.34.66 310.31 KiB 16 100.0% 0793a969-7f6b-4dcc-aa1a-8d9f758daada rack2

UN 172.22.1.122 299.49 KiB 16 100.0% e8318876-58fb-43e7-904d-f53d4b2541e1 rack1

UN 172.22.77.73 342.54 KiB 16 100.0% 774600d4-7c46-415f-9744-c2a55833ca6a rack3

The results show a multi-region cluster running on EKS with all the nodes up and normal as indicated by the “UN” status. QED!
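For a repeatable demo, the same check can be automated by parsing the `nodetool status` text. The Python sketch below assumes the output layout shown above (a `Datacenter:` line followed by node rows that start with a two-letter status code); the function names are hypothetical and the sample is a shortened excerpt of that output:

```python
from collections import defaultdict

# Shortened excerpt of the nodetool status output shown above.
SAMPLE = """\
Datacenter: dc1
===============
Status=Up/Down
|/ State=Normal/Leaving/Joining/Moving
-- Address Load Tokens Owns (effective) Host ID Rack
UN 172.21.21.247 141.61 KiB 16 0.0% b7d43122-7414-47c3-881a-3d0de27eabaa rack1
Datacenter: dc2
===============
UN 172.22.34.66 310.31 KiB 16 100.0% 0793a969-7f6b-4dcc-aa1a-8d9f758daada rack2
"""

def nodes_by_datacenter(status_text):
    """Map each datacenter name to a list of (state, address) tuples."""
    result = defaultdict(list)
    current_dc = None
    for line in status_text.splitlines():
        fields = line.split()
        if line.startswith("Datacenter:"):
            current_dc = fields[1]
        elif current_dc and fields and len(fields[0]) == 2 and fields[0][0] in "UD":
            # Node rows start with a two-letter code such as UN or DN.
            result[current_dc].append((fields[0], fields[1]))
    return dict(result)

def all_up_normal(status_text):
    """True when at least one node is listed and every node reports UN."""
    nodes = [n for dc in nodes_by_datacenter(status_text).values() for n in dc]
    return bool(nodes) and all(state == "UN" for state, _ in nodes)

if __name__ == "__main__":
    print(nodes_by_datacenter(SAMPLE))
    print("all UN:", all_up_normal(SAMPLE))
```

Checking that both `dc1` and `dc2` appear in the result is a simple way to confirm that the two data centers have actually joined into one cluster.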

Conclusions and next steps

As we saw, establishing a multi-region K8ssandra cluster is as simple as injecting the seeds from one cluster into the other. The networking details that enable the clusters to communicate are the hardest part and pose different challenges on each cloud.

In principle, as long as the networking with all the essential ingredients is enabled (VPCs, VPC peering, routing, etc.) Cassandra is able to “gossip” across clouds in a manner similar to intra-cloud (GKE, EKS, etc.) and establish a larger cluster.

In the future, I would like to establish something similar for multi-cloud, maybe between GKE and EKS. But that will have to wait for another day.

For now, I recommend following the K8ssandra-operator work in the K8ssandra community, as it will make a lot of your multi-cluster operations easier by handling them automatically.

Let us know what you think about what we’ve shown you here by joining the K8ssandra Discord or K8ssandra Forum today.

Resources

- Multi-cluster Cassandra deployment with Google Kubernetes Engine

- K8ssandra Documentation: Install K8ssandra on GKE

- Kubernetes

- We Pushed Helm to the Limit, then Built a Kubernetes Operator

- GitHub Repo: K8ssandra Operator

- K8ssandra Documentation: Cass Operator

- K8ssandra Documentation: Install k8ssandra

- Federated Kubernetes Clusters Using Amazon EKS and Kubefed: Implementation Guide

- GitHub Repo: Kubernetes Cluster Federation (kubefed)

- GitHub Repo: Federated Amazon EKS Clusters on AWS (EKS Kubefed)

- Forked Repo: Federated Kubernetes Clusters Using Amazon EKS and KubeFed

- K8ssandra Documentation: Monitor Cassandra (Grafana and Prometheus)

- K8ssandra Documentation: Reaper for Cassandra Repairs